Welcome to the second dispatch of AiOS Dispatch, your resource for the latest in AI, language models, IDE updates, and iOS development.

This week, we will talk about local models and some wild pricing rumors that might shake up the software development world.

512GB of Storage or RAM?

Apple dropped the new Mac Studio, and when I saw that their latest M3 Ultra chip goes up to 512GB of RAM, I knew they had released a beast into the wild.

Why? Because we can run those chonky AI models locally. A 4-bit quantized DeepSeek R1 fits on a single Studio. Want to run the unquantized model? You only need two of them.
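The claim is easy to sanity-check with back-of-the-envelope arithmetic. Here is a minimal sketch, assuming DeepSeek R1's published total of 671B parameters and counting weights only (no KV cache, activations, quantization scales, or memory the OS keeps for itself). The function names are mine, for illustration. "Two Studios" works out if "unquantized" means the 8-bit weights the model was released in; a 16-bit copy would need a third machine by this rough count.

```python
import math

# Assumptions (not from the dispatch): total parameter count and weights-only accounting.
DEEPSEEK_R1_PARAMS = 671_000_000_000  # published total parameter count
STUDIO_RAM_GB = 512                   # top Mac Studio memory configuration


def weights_gb(params: int, bits_per_weight: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return params * bits_per_weight / 8 / 1e9


def studios_needed(params: int, bits_per_weight: int, ram_gb: int = STUDIO_RAM_GB) -> int:
    """Smallest number of machines whose combined RAM holds the weights."""
    return math.ceil(weights_gb(params, bits_per_weight) / ram_gb)


for bits in (4, 8, 16):
    gb = weights_gb(DEEPSEEK_R1_PARAMS, bits)
    print(f"{bits}-bit: {gb:.1f} GB of weights, {studios_needed(DEEPSEEK_R1_PARAMS, bits)} Studio(s)")
```

By this estimate the weights take roughly 336 GB at 4-bit, 671 GB at 8-bit, and 1,342 GB at 16-bit. Real usage will be higher once you add context and runtime overhead, so treat these as lower bounds.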

512GB HOLY FUCK DEEPSEEK R1 IN YOUR HOME

— Rudrank Riyam (@rudrankriyam) March 5, 2025

I have no plans to buy it, as I am eyeing the new Sky Blue Air with 32GB RAM for travel. Still, I am so bullish on Apple's hardware, unlike their clunky software.

I won't talk about the cost of this machine because if you can take advantage of it, you know it is well worth the price.

OpenAI's $10k/Month

Speaking of costs, let's talk about those OpenAI rumors floating around:

- $2,000/month for "high-income knowledge workers"

- $10,000/month for software developers

- $20,000/month for PhD-level research agents

You read that right. Ten grand a month for a developer tool. That is almost my entire salary spent on a subscription. That is one Mac Studio, too.

If these rumors pan out, we are looking at different tiers of intelligence. I wonder who will cough up $10K a month. Big tech firms, maybe, or startups with more VC cash than they can handle.

Free Alternative to Cursor

Trae is an AI IDE by ByteDance’s Singapore-based subsidiary, SPRING PTE. As of this dispatch, it is available free of cost with Claude 3.5 and 3.7 models as well as the DeepSeek R1 and V3 models.

Trae is a free alternate to Cursor

— Rudrank Riyam (@rudrankriyam) March 4, 2025

I tried it out, and while it is not a Cursor killer, it is useful if you are on a budget and do not want to pay for a subscription.

Their privacy policy and terms of service were recently updated, so make sure you read through them at least once.

Signs of Actual Apple Intelligence

After months of workshops that used the Shortcuts app to imitate Apple Intelligence behavior, we may finally see the actual Apple Intelligence this month: the one that was shown back at WWDC 2024.

Apple Intelligence might be coming sooner than we think…

Apps can now securely tell Siri what's on-screen – the key functionality for Personal Context

— Matthew Cassinelli (@mattcassinelli) March 5, 2025

Matthew Cassinelli posted about some new APIs in beta that let apps tell Siri what's on-screen, which is the key functionality for Personal Context.

Remember, if Siri can finally have a new era, so can you.

If Siri can have a new era, so can you.

— Rudrank Riyam (@rudrankriyam) July 30, 2024

On-Device Vision Intelligence

Hugging Face launched their new vision model, SmolVLM-2, which had day-0 support for MLX Swift:

Thrilled to release SmolVLM-2, our newest model, which runs entirely on-device and in realtime, offering a strong foundation for healthcare applications. 🤗🔥

With proper domain-specific training, small models enable offline inference of 2D (ECGs, CXRs) and even 3D modalities…

— Cyril Zakka, MD (@cyrilzakka) March 3, 2025

When they announced it, they added a waitlist for TestFlight (now open-sourced). As an impatient person, I decided to copy Apple's Visual Intelligence using SmolVLM-2 and open-sourced it:

GitHub - rudrankriyam/SmolLens: Implementation of Visual Intelligence Using SmolVLM 2 by Hugging Face

I am looking forward to the iPhone 17 Pro with 12 GB of RAM to run 7B/8B language models easily on it. By September, those models should be as powerful as the current 14B models!

MLX Supremacy

The shiny new MLX Swift framework humbles me and makes me crave the vast amount of knowledge out there in the AI world.

Also, I am noticing a trend of more AI providers shipping MLX support for their models at launch, and that is a big thing for the MLX community!

One distilled set of models that caught my eye was the Llamba models. The interesting part is the graph for running them on the iPhone, especially for longer context:

5️⃣ Llamba on your phone! 📱

Unlike traditional LLMs, Llamba’s fewer recurrent layers make it ideal for resource-constrained devices.

✔ MLX implementation for Apple Silicon

✔ 4-bit quantization for mobile & edge deployment

✔ Ideal for privacy-first applications

— Aviv Bick (@avivbick) March 5, 2025

I am glad that this 8B model can run 40k context on an iPhone 16 Pro!
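To see why long context is the hard part on a phone, here is a rough sketch of how the key/value cache of a regular transformer grows with context length. I am assuming a Llama-3.1-8B-style attention shape (32 layers, 8 KV heads, head dimension 128) and 16-bit cache values as the baseline. Those numbers are my assumption for comparison and do not describe Llamba itself, and I read "40k" as 40,000 tokens.

```python
def kv_cache_bytes(
    seq_len: int,
    n_layers: int = 32,        # assumed: Llama-3.1-8B-style baseline
    n_kv_heads: int = 8,       # assumed: grouped-query attention with 8 KV heads
    head_dim: int = 128,       # assumed head dimension
    bytes_per_value: int = 2,  # assumed 16-bit cache entries
) -> int:
    """Bytes held by the key/value cache of a standard transformer."""
    # Each layer stores one key vector and one value vector per KV head per token.
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return per_token * seq_len


for tokens in (4_000, 40_000):
    gib = kv_cache_bytes(tokens) / 2**30
    print(f"{tokens:>6} tokens: {gib:.2f} GiB of KV cache")
```

Under those assumptions the cache alone is 128 KiB per token, or roughly 4.9 GiB at 40,000 tokens, before counting any weights. A recurrent model carries a fixed-size state instead of a cache that grows with every token, which is why this kind of architecture is attractive on a phone.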

Qwen's QwQ-32B

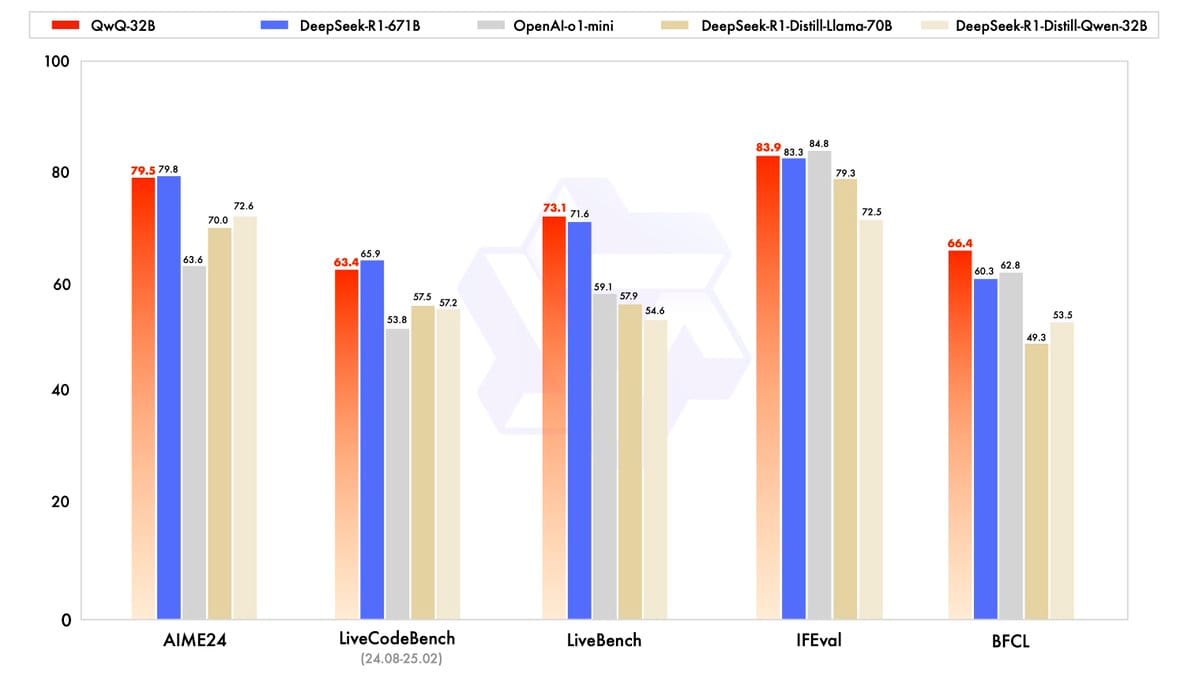

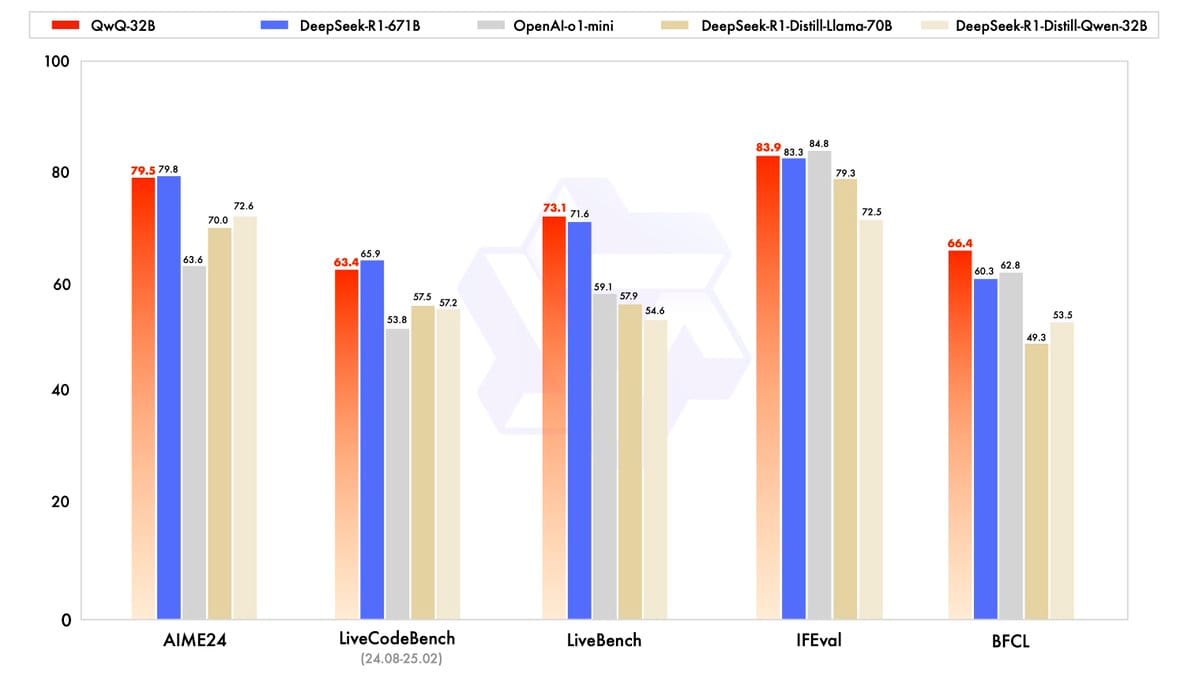

Talking about local models, (again) there is another model from China that is making the news- QwQ 32B taking on the Deep Seek R1 680B in benchmarks:

Again, you can run it on your MacBook using MLX:

QwQ-32B evals on par with Deep Seek R1 680B but runs fast on a laptop. Delivery accepted.

Here it is running nicely on a M4 Max with MLX. A snippet of its 8k token long thought process:

— Awni Hannun (@awnihannun) March 5, 2025
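If you want to try this yourself, a minimal sketch with the `mlx-lm` Python package looks something like the following. It assumes an Apple Silicon Mac with `mlx-lm` installed via pip, and the model ID `mlx-community/QwQ-32B-4bit` is my assumption of a 4-bit community conversion, so swap in whichever QwQ-32B MLX build you actually use. The helper names are mine.

```python
def build_messages(question: str) -> list:
    """Wrap a question in the chat-message format that chat templates expect."""
    return [{"role": "user", "content": question}]


def run_qwq(
    question: str,
    model_id: str = "mlx-community/QwQ-32B-4bit",  # assumed repo name, adjust as needed
    max_tokens: int = 1024,
) -> str:
    """Load the model with MLX and generate a reply to a single question."""
    # Imported lazily so this file still loads on machines without MLX installed.
    from mlx_lm import load, generate

    # Downloads the weights on first use, then loads them into unified memory.
    model, tokenizer = load(model_id)

    # Turn the chat messages into the prompt string the model was trained on.
    prompt = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        tokenize=False,
    )

    # verbose=True streams tokens to the terminal as they are generated.
    return generate(model, tokenizer, prompt=prompt, max_tokens=max_tokens, verbose=True)
```

Calling `run_qwq("How many r's are in strawberry?")` should stream the model's thinking and answer to the terminal. The first run downloads the weights, so expect a wait, and reasoning models like this one can burn through thousands of tokens before answering, so raise `max_tokens` if the reply gets cut off.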

AI Tool of the Week: Pico AI Homelab

Pico AI Homelab LLM server (Mac App Store, Starling Protocol Inc): Lightning Fast. Complete Privacy. Effortless Setup. Pico is the ideal LAN AI server for home and office use, designed for professionals and small teams. It offers key benefits: • Pico AI Homelab includes over 300 state-of-the-art models such as: • DeepSeek R1 • Meta Llama • Microsoft Phi 4 • Q…

Pico AI (non-sponsored) is a fast AI server for home and office. It is built on MLX, so you can use it to run QwQ-32B on your MacBook or the DeepSeek R1 model on your new Mac Studio!

Give it a try!

What's Next

We get more AI updates in a few weeks than we get from Apple in months (and that is saying something). As my friend Thomas said:

Apple wants to make the perfect timeless software; they can't make software that evolves fast. They don't know how to do that. AI tooling is growing way too fast for Apple to release anything meaningful.

— Thomas Ricouard (@Dimillian) March 5, 2025

Happy responsible vibe coding!
